The AI Paradox

AI Pollution: The Hidden Crisis Shaping Our World

Why Labeling AI Content Now Is Critical

Reading Time: 7–8 Minutes

Why labeling AI-generated content is no longer optional but a survival imperative.

Once AI creates content faster than humans can label it, the battle is lost.

The AI Paradox

Imagine a world where your morning news is crafted by an algorithm, your political debates are fueled by deepfakes, and your doctor’s diagnosis is powered by a neural network. This is not science fiction; it is a reality we are already building. But here’s the catch: AI pollution, the unchecked proliferation of unlabeled AI-generated content, is creating a crisis that could redefine our society. From disinformation to ethical dilemmas, the stakes are higher than ever. The question isn’t whether AI will shape our future, but how we’ll govern it. And the answer starts with one simple act: compulsory labeling of all AI-generated content.

AI pollution is a new kind of environmental and societal problem, driven by the rapid advancement of AI and its unintended consequences.

What Is AI Pollution?

AI pollution refers to the unregulated spread of content created by artificial intelligence, or by the humans who train it, often without clear disclosure. Think of it as a digital wildfire: AI-generated text, images, and videos are flooding the internet, blurring the line between reality and fabrication. The problem isn’t just what AI creates; it’s who controls the narrative and whether we can still trust the information we consume.

Why It Matters

- Algorithmic bias in training data can perpetuate harmful stereotypes.

- Deepfakes and AI-generated disinformation can manipulate public opinion.

- Generative AI tools like ChatGPT, Claude, DeepSeek, or DALL·E produce content that’s hard to distinguish from human-made work.

- AI hallucinations (false or misleading outputs) can mislead scientists, politicians, and patients.

How AI Pollution Affects Us All


Everyday Life: The Digital Footprint of AI

From personalized ads to AI-curated social feeds, our daily lives are shaped by algorithms. But when AI-generated content is unlabeled, it leaves a digital footprint of uncertainty. For example, a viral post claiming a “miracle cure” (a common sight on social media) could be AI-generated, misleading users into harmful actions. Algorithmic transparency is no longer a luxury; it’s a necessity.

Science: AI in the Lab, Out of Control

In research, AI is a game-changer. But when AI-generated data is used without labels, it can skew results. Studies have found that AI-generated data can introduce inaccuracies into research. Neural networks trained on biased data can produce flawed predictions, endangering everything from weather forecasts to drug development.

Politics: The Battle for Digital Democracy

AI pollution is a tool for manipulation. Politicians and other actors can use deepfakes to fabricate speeches, or AI-generated ads to spread disinformation. Figures circulating online put the rise in AI-driven disinformation during the 2024 U.S. election at 30%, with generative models, such as generative adversarial networks (GANs), producing realistic fake political speeches. Without labeling, the digital democracy we rely on is at risk.

Healthcare: AI Diagnoses and the Cost of Misinformation

AI is revolutionizing medicine, from imaging diagnostics to personalized treatments. But if AI-generated data is unlabeled, it could lead to medical hallucinations: an AI system might misdiagnose a patient because of faulty training data, delaying treatment. AI stewardship is critical to prevent such errors.

The Narrative Crisis: AI and the Storytelling Revolution

AI is reshaping storytelling, from novels to movies. But when AI-generated narratives are unlabeled, they can distort reality. For instance, AI-generated historical fiction might rewrite history to fit a particular agenda, putting the narrative integrity of our culture at risk. Algorithmic accountability is needed to ensure AI doesn’t become a tool for propaganda.

You can think of AI pollution as the “carbon footprint” of artificial systems. It arises from:

- AI-generated content that distorts reality

- Algorithmic bias: models trained on flawed data, perpetuating inequities

- AI-written articles, tweets, and ads that flood digital spaces

AI systems are trained on massive datasets, often scraped from the internet without consent. This creates a feedback loop: the more data AI consumes, the more it can amplify the societal biases and misinformation contained in that data. For example, facial recognition tools have shown higher error rates for darker-skinned individuals, entrenching systemic racism.

Misinformation on Steroids

Social media algorithms prioritize engagement, not truth. AI-generated content fuels:

- Fake news: AI writes articles faster than fact-checkers can respond.

- Bias amplification: models inherit the flaws in their training data; medical algorithms, for example, underdiagnose certain demographics, worsening healthcare gaps.

The Reproducibility Crisis

AI models like GPT-4 are “black boxes”: even their creators don’t fully understand how they arrive at their outputs. This undermines scientific rigor and accountability.

Moral Hazard

Who is responsible when AI goes wrong? A self-driving car killing a passenger? A chatbot spreading harmful stereotypes? Current laws lack clarity.

Politics and Geopolitics

Deepfakes as Cyberweapons

Erosion of Trust

Global Power Struggles

Disinformation Ecosystems

The “Boiling Frog” Strategy

Disinformation spreads incrementally. First, AI generates a fake post denying climate change. Then microtargeting pushes it to the most receptive audiences. By the time science activists react, the damage is done.

The Role of Big Tech

Platforms like Facebook and X (formerly Twitter) profit from engagement, even if it’s harmful. AI accelerates their algorithms’ viral tendencies, creating a moral dilemma.

Healthcare’s Silver Lining and Shadow

- Diagnostic Errors: biased algorithms missing rare diseases in marginalized groups.

- Patient Distrust: a wrong AI diagnosis could irreparably harm trust in medicine.

- Data Exploitation: medical AI trained on patient data without consent.

The Battle for Truth and Narrative

From “Post-Truth” to “AI-Truth”

If AI can fabricate anything, what role is left for fact-checking? Experiments have shown AI-generated essays passing plagiarism checks more easily than essays written by human students.

Echo Chambers and Identity Politics

AI tailors content to reinforce beliefs, deepening polarization. Imagine a world where AI only shows you content that aligns with your existing views, and generates opposing extremes to radicalize you. By the way, that’s exactly what’s happening with every major social media platform out there.

Labeling AI: A Lifeline for Truth

Why Labels Matter Now

- Transparency: if every AI post is tagged, users can contextualize it.

- Regulation: laws like the EU’s AI Act require AI-generated output to be marked in a machine-readable format and disclosed in a clear and distinguishable manner.

- Ethical Accountability: brands using AI-generated ads must disclose it, or face backlash.
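To make “machine-readable” a little more concrete, here is a minimal Python sketch of what a label traveling with a piece of AI-generated content could look like. It is not the format the EU AI Act prescribes, nor C2PA or any other real standard; the field names and the functions `make_ai_label` and `label_matches` are hypothetical, chosen only for illustration. The idea: a small JSON record declares the content as AI-generated and stores a SHA-256 hash of the exact text, so software can check whether a label actually belongs to the content it is attached to.

```python
import hashlib
import json
from datetime import datetime, timezone


def make_ai_label(content: str, generator: str) -> str:
    """Return a JSON label declaring `content` as AI-generated.

    Hypothetical format for illustration; field names are assumptions,
    not taken from any law or standard.
    """
    label = {
        "ai_generated": True,
        "generator": generator,  # name of the model or tool (illustrative)
        "created_utc": datetime.now(timezone.utc).isoformat(),
        # Hash of the exact content: copying this label onto different
        # text produces a mismatch that software can detect.
        "content_sha256": hashlib.sha256(content.encode("utf-8")).hexdigest(),
    }
    return json.dumps(label, sort_keys=True)


def label_matches(content: str, label_json: str) -> bool:
    """Check that a label parses, says AI-generated, and fits this content."""
    try:
        label = json.loads(label_json)
    except json.JSONDecodeError:
        return False
    if not isinstance(label, dict):
        return False
    expected = hashlib.sha256(content.encode("utf-8")).hexdigest()
    return label.get("ai_generated") is True and label.get("content_sha256") == expected


if __name__ == "__main__":
    post = "Miracle cure discovered!"
    tag = make_ai_label(post, generator="example-model")
    print(tag)
    print(label_matches(post, tag))                # True
    print(label_matches(post + " (edited)", tag))  # False
```

A hash only ties the label to the text; it does not prove who issued the label, and nothing here stops someone from stripping the label off entirely. Real provenance schemes add cryptographic signatures and embedding or watermarking for exactly that reason.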

The Danger of “Label Lag”

Once AI creates content faster than humans can label it, the battle is lost. TikTok’s AI-driven trends outpace moderation teams. We need global standards now.

Global Governance and the Race for AI Arms Control

The Paris Agreement for AI

Can nations agree on binding limits? For now, the U.S., China, France, and Russia compete to build “AI superiority,” ignoring the pollution toll.

The Role of Smaller Nations

Countries like Singapore and India are pioneering AI ethics frameworks. Will they lead or follow?

Conclusion: A Call to Action

AI pollution isn’t inevitable; it’s a choice. We can regulate it, label it, and demand ethical AI. Otherwise, we risk a future where algorithms dictate truth, humanity’s creativity is outpaced by code, and democracy becomes a relic.

Start Today:

- Demand labels for AI content.

- Support AI ethics in education.

- Spread awareness, because if we don’t act soon, it will be too late.

Keywords: AI pollution, deepfake, algorithmic bias, disinformation, AI labeling, healthcare AI, political deepfakes, algorithmic accountability, AI ethics, AI-generated content.

CRS (Compulsory Regulatory Stop):

- Consentless Creation: AI generating content without user permission.

- Digital Exhaust: data waste from AI training processes.

- Narrative Warfare: AI-driven disinformation campaigns.

By tackling AI pollution head-on, we can ensure technology elevates humanity, rather than eroding it. The time to act is now.

Author: Klaudi Bregu

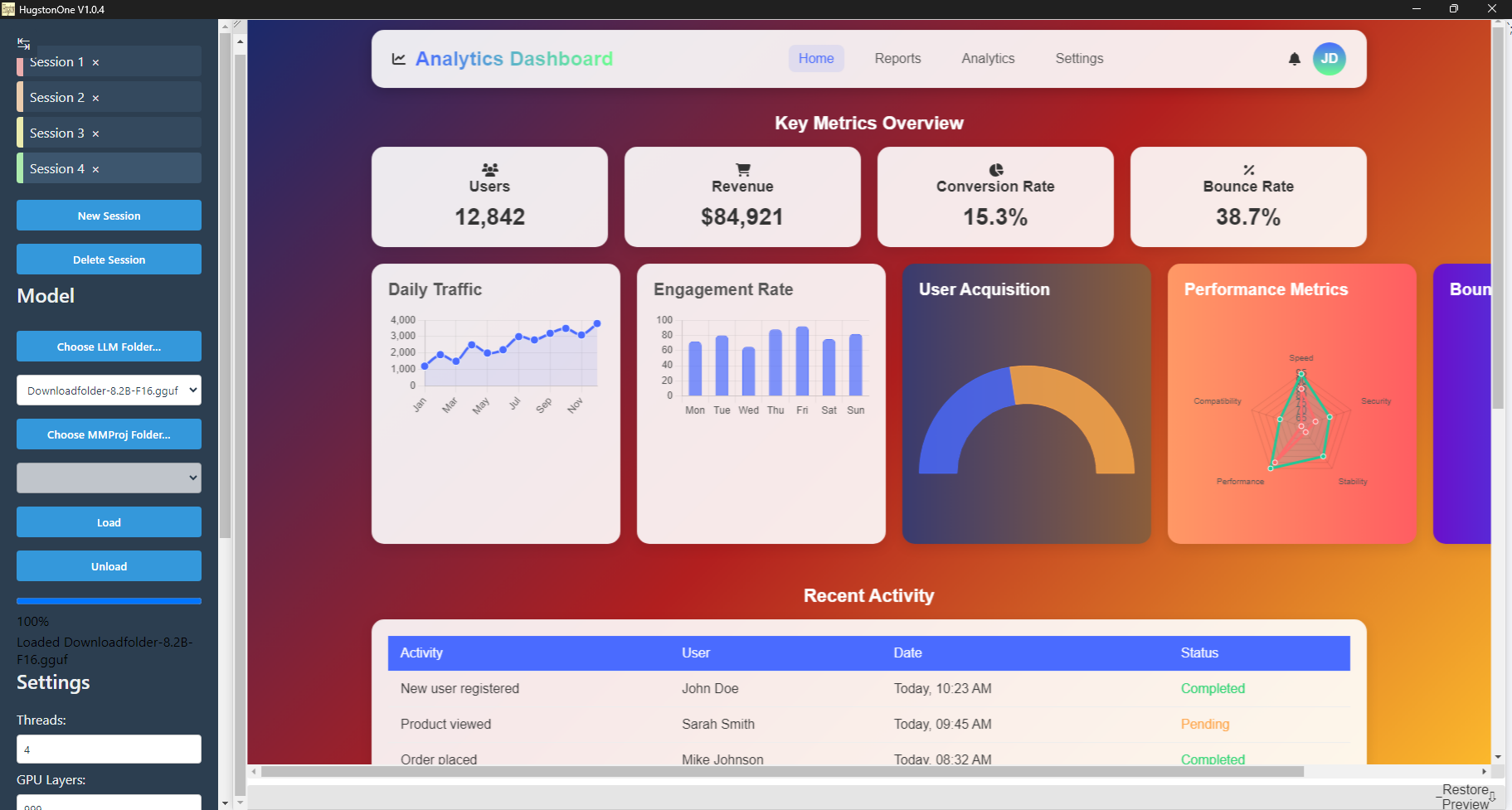

Founder of Hugston.com and HugstonOne app.

Empowering Journeys, One Story at a Time